Faster Web vs. TCP Slow-Start

Ever obsess about trying to find the "best and fastest" internet provider? Having recently gone through the marketing vortex of comparing a dozen different plans, I was then reminded of a simple fact: the primary metric (bandwidth) used by the industry is actually highly misleading. It turns out, for most web-browsing use cases, an internet connection over several Mbps offers but a tiny improvement in performance.

One of the main culprits is "TCP slow-start", and yes that is a feature, not a bug. To understand why, we have to look inside of the TCP stack itself, and in doing so we can also learn a few interesting tips for how to build faster web-services in general.

TCP Slow-start

TCP offers a dozen different built-in features, but the two we are interested in are congestion control and congestion avoidance. TCP slow-start specifically is an implementation of congestion control within the TCP layer:

Slow start is used in conjunction with other algorithms to avoid sending more data than the network is capable of transmitting, that is, to avoid causing network congestion.

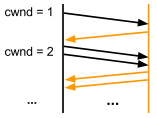

The high-level flow is simple: the client sends a SYN packet which advertises its maximum buffer size (rwnd - receive window), the server replies by sending several packets back (as many as its cwnd - congestion window - allows), and then each ACK it receives from the client lets it grow cwnd by one more packet. In effect, the number of packets that can be "on the wire" while unacknowledged doubles every round trip.

This phase is also known as the "exponential growth" phase of the TCP connection. So, why do we care? Well, no matter what the size of your pipe, every TCP connection goes through this phase, which also means that more often than not, the utilized bandwidth is effectively limited by the settings of the sender's and receiver's buffer sizes.
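
To make the exponential growth concrete, here is a minimal sketch of how the window evolves over successive round trips. It is an idealized model, not how any real TCP stack is implemented: it assumes no packet loss, counts windows in whole packets, and the function name `slow_start_windows` and the 45-packet receive window are made up for illustration.

```python
def slow_start_windows(initial_cwnd, rwnd, round_trips):
    """Return the number of packets allowed in flight on each round trip.

    Idealized slow-start: the window doubles every round trip and is
    capped by the receiver's advertised window (rwnd). Loss is ignored.
    """
    windows = []
    cwnd = initial_cwnd
    for _ in range(round_trips):
        windows.append(min(cwnd, rwnd))
        cwnd = min(cwnd * 2, rwnd)  # doubling, but never past rwnd
    return windows


# Hypothetical values: start at 3 packets, receiver allows 45 in flight.
print(slow_start_windows(initial_cwnd=3, rwnd=45, round_trips=6))
# prints [3, 6, 12, 24, 45, 45]
```

Note that it takes four full round trips before the receiver's buffer, rather than the congestion window, becomes the limit, and none of that depends on how fast the link is.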

HTTP and TCP slow start

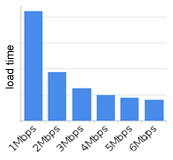

Of course, a higher bandwidth connection will help when you are streaming a large file, or running a vanity speed test. The problem is, HTTP traffic tends to make use of short and bursty connections - in these cases we often never even reach the full capacity of our pipes! Research done at Google shows that an increase from 5Mbps to 10Mbps results in a disappointing 5% improvement in page load times.

Or put slightly differently, a 10Mbps connection uses, on average, only 16% of its capacity. Yikes! As it turns out, if we want faster internet, we should focus on cutting down the round-trip time between the client and server, not necessarily just investing in bigger pipes.
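
A rough back-of-the-envelope model shows why round-trip time matters more than bandwidth for short transfers. The sketch below is an assumption-laden estimate, not a measurement: it assumes a 1460-byte packet payload, one round trip for the TCP handshake, no packet loss, and a window that doubles every round trip until the link itself becomes the limit. The function name `fetch_time_ms` and the 40,000-byte resource are hypothetical.

```python
MSS = 1460  # assumed payload bytes per packet


def fetch_time_ms(size_bytes, bandwidth_mbps, rtt_ms, initial_cwnd=3):
    """Estimate the time to fetch one resource over a fresh TCP connection."""
    # Bytes the link can carry in one round trip (bandwidth-delay product).
    bdp_bytes = bandwidth_mbps * 1_000_000 / 8 * rtt_ms / 1000
    window = initial_cwnd * MSS
    sent = 0
    rounds = 1  # one round trip for the handshake
    while sent < size_bytes:
        sent += min(window, bdp_bytes)  # limited by cwnd or by the link
        window *= 2
        rounds += 1
    return rounds * rtt_ms


print(fetch_time_ms(40_000, bandwidth_mbps=5, rtt_ms=100))   # prints 500
print(fetch_time_ms(40_000, bandwidth_mbps=10, rtt_ms=100))  # prints 500
print(fetch_time_ms(40_000, bandwidth_mbps=5, rtt_ms=50))    # prints 250
```

In this toy model, doubling the bandwidth changes nothing because the transfer finishes before the window ever fills the pipe, while halving the round-trip time halves the fetch time.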

The story of CWND

If TCP slow start is, well, slow, then couldn't we just make it faster? Turns out, until very recently the Linux TCP stack itself was hardcoded to start with a congestion window (cwnd) of just 3 or 4 packets, which amounts to about 4KB (~1360 bytes per packet). Combine that with the unfortunately frequent pathological case of fetching a single resource per connection, and you have managed to severely limit your performance.

As of kernel version 2.6.39, following a protracted discussion and a number of IETF recommendations, the initial cwnd value has been raised to 10 packets, which in itself is a huge step forward. Only one problem: guess what kernel versions most servers run today? Yes, perhaps it is time to upgrade your servers. As a practical tip, if you have been thinking about enabling SPDY for your web-service, then running on anything but some of the latest kernels won't actually give you any performance improvements! A tiny change in the TCP stack, but a big difference overall.
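
To see what the change from 3 to 10 packets buys, the sketch below counts the round trips needed to deliver a response of a given size. It reuses the ~1360 bytes per packet figure from above and assumes an idealized, loss-free slow-start that doubles every round trip. The function name `round_trips_to_deliver` and the 13,000-byte response are hypothetical.

```python
import math

PACKET_BYTES = 1360  # per-packet payload, the approximate figure used above


def round_trips_to_deliver(size_bytes, initial_cwnd):
    """Count round trips needed to send size_bytes under idealized slow-start."""
    packets_left = math.ceil(size_bytes / PACKET_BYTES)
    cwnd = initial_cwnd
    trips = 0
    while packets_left > 0:
        packets_left -= cwnd  # send a full window this round trip
        cwnd *= 2             # window doubles for the next one
        trips += 1
    return trips


# A hypothetical 13,000-byte response fits in 10 packets.
print(round_trips_to_deliver(13_000, initial_cwnd=3))   # prints 3
print(round_trips_to_deliver(13_000, initial_cwnd=10))  # prints 1
```

Under these assumptions, the larger initial window delivers the whole response in a single round trip instead of three.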

So what can I do about it?

TCP slow start is a feature, not a bug, and it does carry some interesting and important implications. As developers we often overlook the round trip time from the client to the server, but if we are truly interested in building a faster web, then this is a good time to investigate your options: re-use your TCP connections when talking to web-services, build web-services that support HTTP keep-alive and pipelining, and do think about end-to-end latency. Oh, and don't waste your money on that 10Mbps+ pipe, you probably don't need it.
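
As one illustration of connection re-use, the sketch below fetches several resources over a single persistent connection using Python's standard `http.client`, so that only the first request pays for the handshake and the initial slow-start ramp. The helper name `fetch_all` is hypothetical, and the sketch assumes the server honors HTTP/1.1 keep-alive; it does not attempt pipelining, which `http.client` does not support.

```python
import http.client


def fetch_all(host, port, paths):
    """Fetch several paths over one persistent HTTP/1.1 connection."""
    conn = http.client.HTTPConnection(host, port, timeout=10)
    bodies = []
    try:
        for path in paths:
            conn.request("GET", path)
            response = conn.getresponse()
            # The body must be read fully before the socket can be reused.
            bodies.append(response.read())
    finally:
        conn.close()
    return bodies
```

A call such as `fetch_all("example.com", 80, ["/", "/about"])` would issue both requests on the same TCP connection, provided the server keeps it open between responses.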

Ilya Grigorik is a web ecosystem engineer, author of High Performance Browser Networking (O'Reilly), and Principal Engineer at Shopify.
